Xiaopeng Li

AI researcher with ten years of experience. Primarily interested in Large Language Model training, Reinforcement Learning from Human Feedback, Natural Language/Code Generation, LLM Agents, Retrieval Augmented Generation, etc.

About me

I am currently a Senior Applied Scientist at Amazon AWS. I completed my Ph.D. at the Hong Kong University of Science and Technology in 2019. I have worked primarily on large language models and generative AI. As a science lead, I have initiated and successfully launched two products: Amazon CodeWhisperer and Amazon Q in IDE. I am passionate about doing original and impactful research in AI and ML, and I enjoy working with smart people on exciting projects. I like to create proofs of concept and new products with the latest technologies.

I think learning, exploration and creation are lifelong endeavours.

My experience

Senior Applied Scientist

Seattle, WA

Initiator and science lead for the Amazon Q in IDE project. Worked on developing the chat model, RLHF, and agents for code generation.

2022 - present

Applied Scientist

Seattle, WA

Founding member of the CodeWhisperer team. Led the pretraining and finetuning of code LLMs with tens of billions of parameters since 2020.

2019 - 2022

Intern at Google Cloud AI

Sunnyvale, CA

Worked on AutoML Recommendations with the Google Brain and Cloud AI teams.

2018

PhD Student at the Hong Kong University of Science and Technology

Hong Kong, China

PhD in Computer Science and Engineering

2014 - 2019

Research

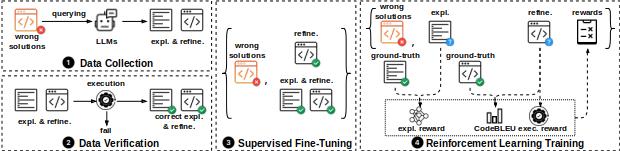

Training LLMs to Better Self-Debug and Explain Code - preprint 2024

Nan Jiang, Xiaopeng Li et al.

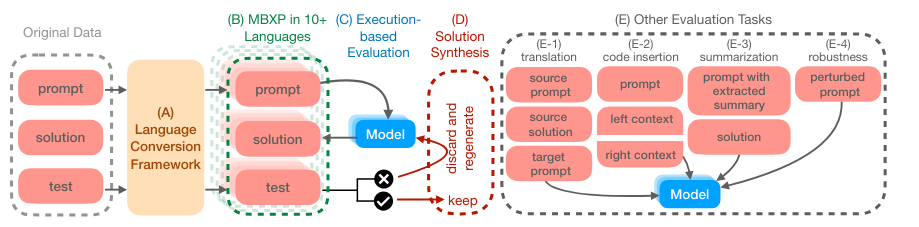

Multi-lingual Evaluation of Code Generation Models - ICLR 2023 (spotlight)

Ben Athiwaratkun, Sanjay Krishna Gouda, Zijian Wang, Xiaopeng Li, et al.

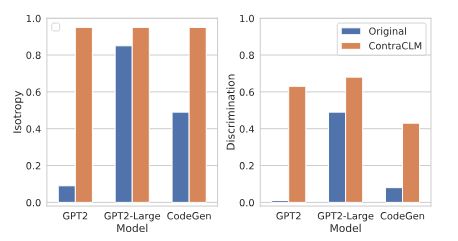

CONTRACLM: Contrastive Learning For Causal Language Model - ACL 2023

Nihal Jain, Dejiao Zhang, Wasi Uddin Ahmad, Zijian Wang, Feng Nan, Xiaopeng Li, et al.

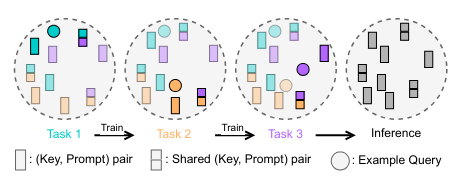

Exploring Continual Learning for Code Generation Models - ACL 2023

Prateek Yadav, Qing Sun, Hantian Ding, Xiaopeng Li, et al.

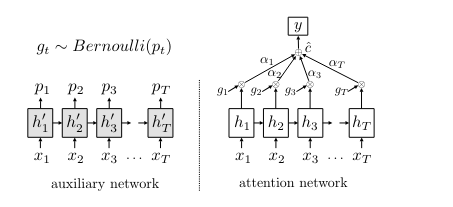

Not All Attention Is Needed: Gated Attention Network for Sequence Data - AAAI 2020

Lanqing Xue, Xiaopeng Li, Nevin L. Zhang

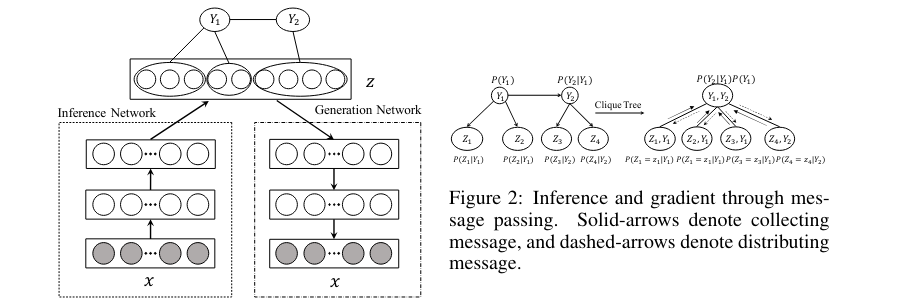

Learning Latent Superstructures in Variational Autoencoders for Deep Multidimensional Clustering - ICLR 2019

Xiaopeng Li et al.

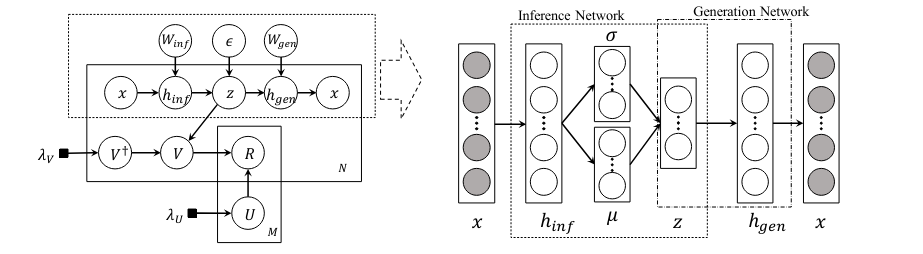

Collaborative Variational Autoencoder for Recommender Systems - KDD 2017

Xiaopeng Li and James She.

Demo

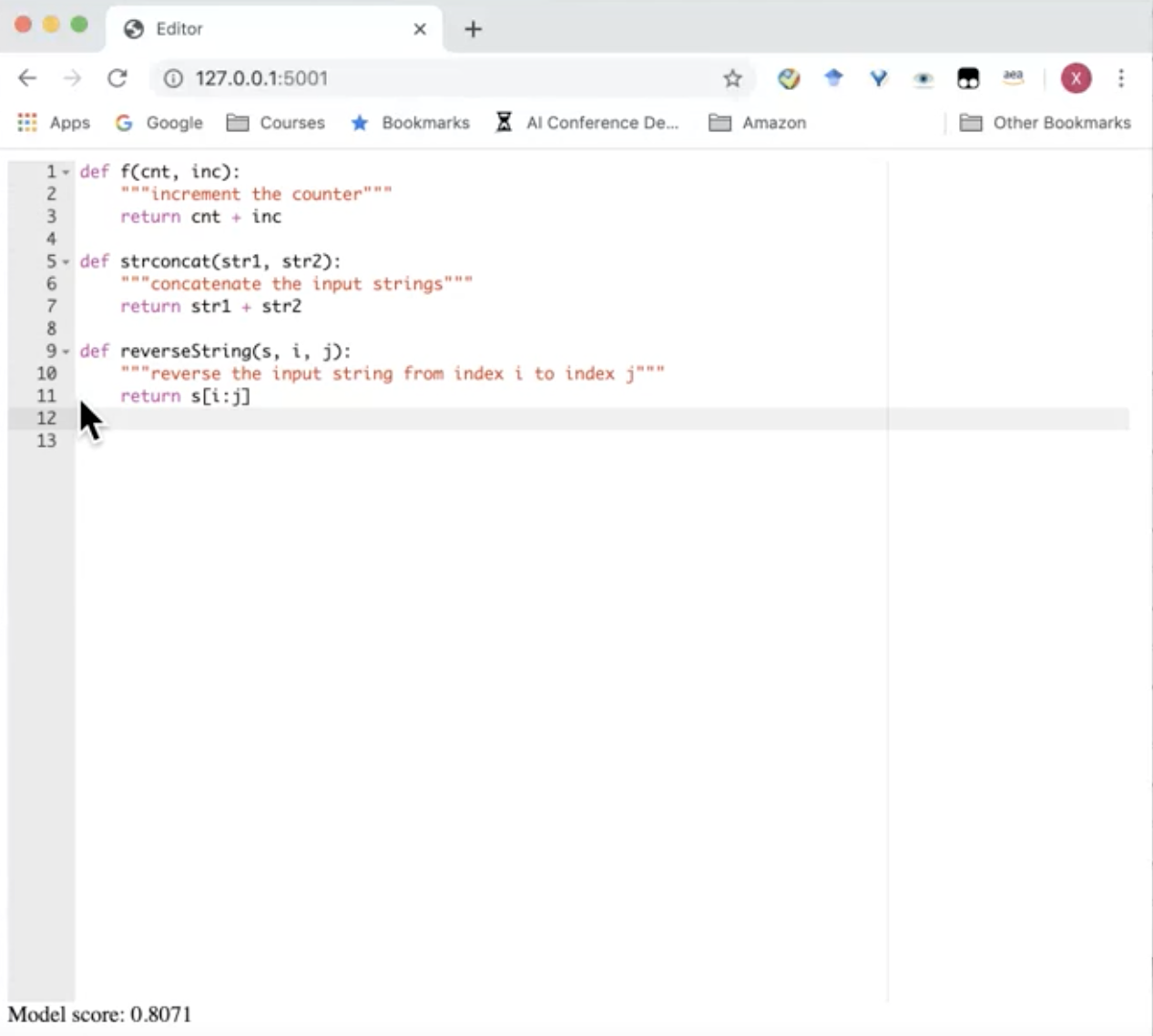

Code AutoCompletion with LLM

I pretrained a code GPT model in Aug 2020 for code generation, and created an interactive demo for code completion in an IDE. Very primitive, but it was 2020, well before the LLM surge.